Resources Lesson 1: Evaluation of Interventions

The Evaluation of Interventions course module on the Peoples-uni master's programme introduces the topic of evaluation as follows:

"While there are many definitions of the term evaluation, probably the most frequently given definition is 'evaluation is the systematic assessment of the worth or merit of some project, program or policy'. Generally speaking the purpose of evaluation is to produce useful information for accountability and learning purposes and to help stakeholders make decisions. Evaluation can be applied to the field of public health in relation to 'almost any organized public health activity including direct service interventions, community mobilization efforts, research initiatives, surveillance systems, policy development activities, outbreak investigations, laboratory diagnostics, communications, infrastructure building projects, training and educational services and administrative systems' (Centers for Disease Control and Prevention Framework). To enhance your general understanding of evaluation and how it differs from other types of learning and accountability activities please read the following:

Pages 1-11 of "Introduction to Program Evaluation for Public Health Programs: A Self-Study Guide". U.S. Department of Health and Human Services, Centers for Disease Control and Prevention (CDC), Office of the Director, Office of Strategy and Innovation. Atlanta, GA: Centers for Disease Control and Prevention, 2005.

A Framework for Program Evaluation by CDC is also an important resource.

The introduction to evaluation section on the Research Methods Knowledge Base website: Introduction to Evaluation - by William Trochim hosted by https://conjointly.com/kb/."

Other taxonomies are described below:

The BetterEvaluation Rainbow Framework organises evaluation options (methods and strategies) by the tasks involved in an evaluation, grouped into seven clusters. Please browse through it: it makes evaluation come alive and guides learners through the steps of thinking about the theory of change in their programme. You may find it useful if you want to evaluate a programme.

When thinking about indicators, consider what makes a good indicator: what is its definition, and how will you measure it? A neat acronym for developing good indicators is "SMART": Specific, Measurable, Achievable, Realistic, Timely. An example would be "under-5 mortality per 1,000 population per year". It is specific, you can measure it, you can achieve a reduction in that indicator with your programme so it is achievable and realistic, and you will be able to collect data on it in the time frame you need, so it is timely.
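As a minimal sketch of how a rate indicator of this kind might be calculated and compared between two years, the example below uses invented counts. The function name `rate_per_1000` and all figures are assumptions made for illustration; they do not come from the course material or from any real programme.

```python
def rate_per_1000(events: int, population: int) -> float:
    """Return the number of events per 1,000 population for one period."""
    if population <= 0:
        raise ValueError("population must be positive")
    return 1000 * events / population


# Hypothetical figures, invented purely for illustration.
baseline = rate_per_1000(events=90, population=12_000)   # year before the programme
follow_up = rate_per_1000(events=66, population=12_000)  # year after the programme

# A negative change means the indicator fell between the two years.
change = follow_up - baseline

print(f"Baseline:  {baseline:.1f} per 1,000 per year")
print(f"Follow-up: {follow_up:.1f} per 1,000 per year")
print(f"Change:    {change:+.1f} per 1,000 per year")
```

Stating the numerator, denominator and time period explicitly, as the function arguments do here, is one way to check that an indicator is specific and measurable before data collection begins.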

A simple structure, process, outcome model should include:

1. Inputs/Structure (staff, buildings, systems, policies)

2. Process

- Effectiveness (access and quality for the whole population)

- Equity (access and quality for marginalised groups; participation and accountability)

- Efficiency (adequacy of funding; costs and productivity; administrative efficiency)

3. Outcome

- Effectiveness (health outcomes; patient satisfaction)

- Equity (health outcomes for disadvantaged groups)

- Efficiency (cost-effectiveness of the whole programme)

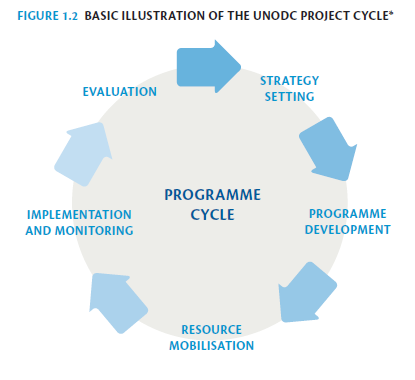

Evaluation in the project cycle

There are many different ways to think about the 'project cycle'. One example is the United Nations Office on Drugs and Crime's Evaluation in the Project/Programme cycle, which includes the graphics below and makes the point that "Evaluation is often seen as an 'end of project cycle' exercise. Evaluation, however, plays a distinct role at all stages of the project cycle."

From Evaluation Handbook, United Nations Office on Drugs and Crime, Vienna, 2017.

At this stage, you might want to look again at A Framework for Program Evaluation; its graphic reinforces the notion of a cyclical approach to evaluation.

From Centers for Disease Control and Prevention (CDC)

Case study: Evaluation of Non-Communicable Disease (NCD) interventions.

The paper Planning and evaluating population interventions to reduce noncommunicable disease risk – reconciling complexity and scientific rigour? outlines a number of the issues to consider in the evaluation of interventions, using NCD as an example. The paper takes a comprehensive approach and concludes: "We need to focus on evaluations that provide meaningful evidence on the population-wide impact of NCD prevention efforts. Evaluations have to be customised to match the intervention types, and be capable of local adaptation. The first stage of comprehensive planning should identify elements to be pursued and the evaluation metrics required for each component. In the overall program, there can be more rigorous evaluation of subcomponent interventions, but greater attention should be paid to the external validity of the subcomponents, and relevance for scaling up to the population. Population surveillance systems should form the endpoint methods for assessing impact – that is, assessing the net sum of the prevention efforts in a population health program rather than attempting to assign causal influences to any specific component. Some progress has been made in developing methods for complex interventions in complex systems, but significant challenges remain. What is clear is that the best evaluation designs combine different research methodologies, both quantitative with qualitative, to produce a diverse range of data that will optimally support both the integrity of the study and its external validity."

The paper includes the following figure, which describes the evaluation process and the issues involved:

Figure 1. Stages in the evaluation of complex NCD prevention programs: integrating health promotion and epidemiological methods. From Planning and evaluating population interventions to reduce noncommunicable disease risk – reconciling complexity and scientific rigour? by Bauman and Nutbeam in Public Health Research and Practice. Licensed under CC BY-NC-SA 4.0.
